Integration and Differentiation

Questions to David Rotermund

By Jan Wiersig, modified by Udo Ernst and translated into English by Daniel Harnack. David Rotermund replaced the Matlab code with Python code.

Numerical Integration

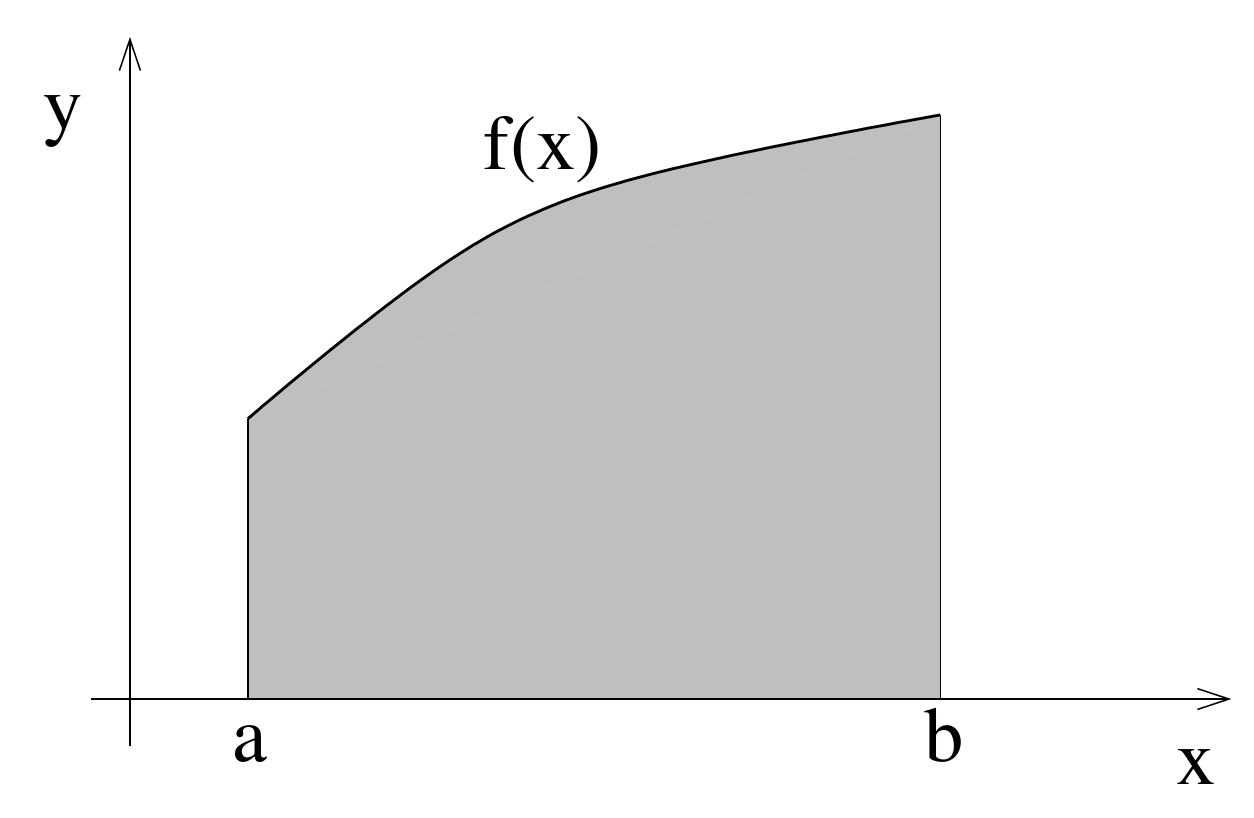

In many cases, the antidervative can not be expressed in closed form via standard functions. This makes numerical methods for the approximation of the antiderivative necessary (‘quadrature’). The intention of this chapter is to introduce and explain the basic concepts of numerical integration. For this, we consider definite integrals of the form

with

Figure 7.1.: Definite integral with boundaries a and b as the area under a curve f(x).

An example for which numerical integration is useful are one-dimensional ordinary differential equations, that can be written as definite integrals:

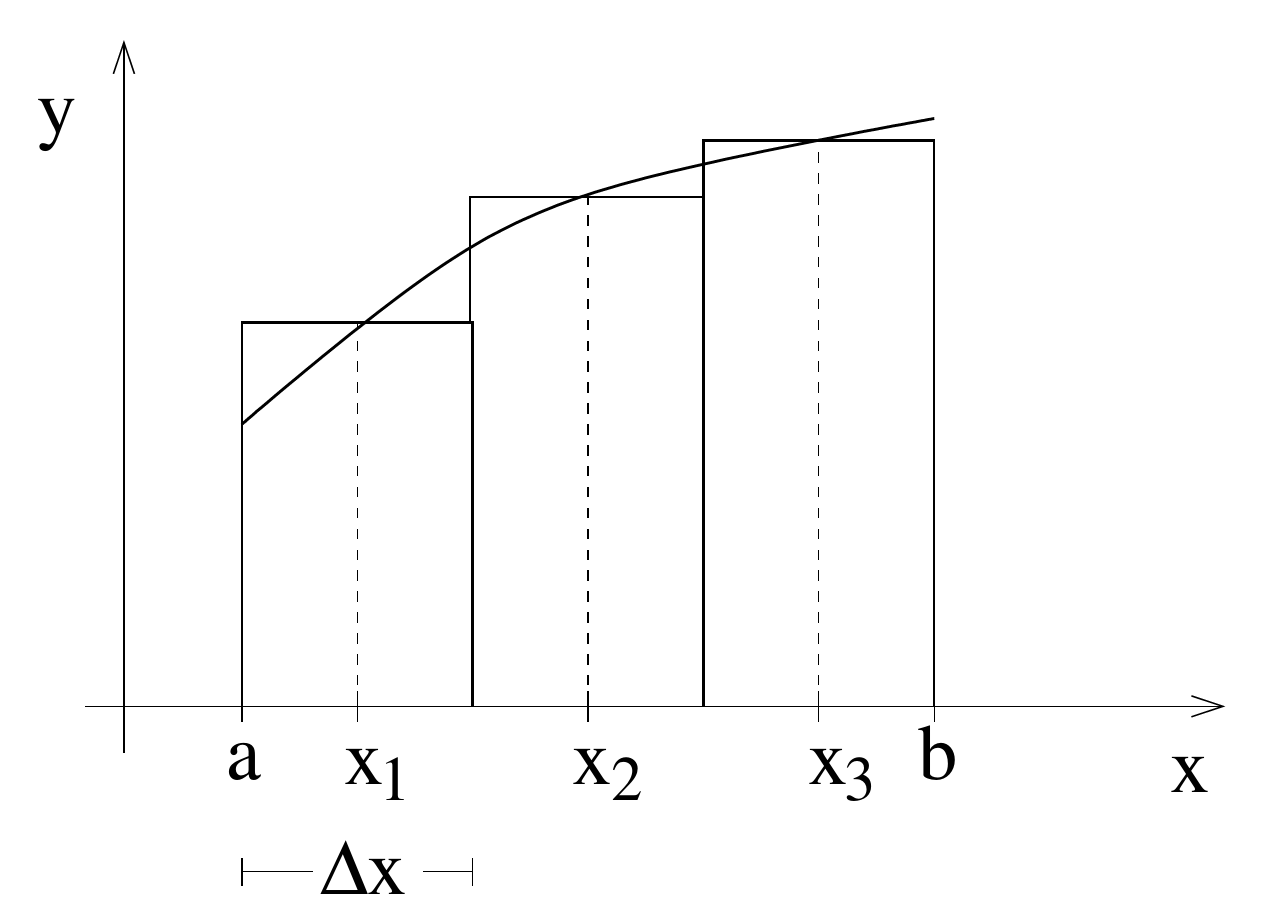

Midpoint Rule

The simplest way of numerical integration is via the midpoint rule, or also rectangle method. Here, the interval of integration is split into sub-intervals as shows in fig. 7.2. The sub-intervals have a width of

Figure 7.2.: Scheme of the midpoint rule.

The integral is then replaced by the Riemann sum:

To illustrate the accuracy of this approximation, consider the following integral of the Gaussian bell-curve

Due to the regular occurrence of this integral in many contexts, the corresponding primitive, the so called ‘error’-function erf

The following table shows the comparison of the exact solution with the result of the midpoint rule for different numbers of supporting points

| 5 | 0.844088 | -0.00138691 |

| 10 | 0.843047 | -0.00034613 |

| 20 | 0.842787 | -0.00008649 |

| 60 | 0.842711 | - |

From the table we can derive that the error is proportional to

The sub-interval

Substitution shows that some terms vanish

Writing now with central derivation

Insertion yields

Taking the sum \(I = \sum_{i=1}^N I_i\) , many terms will vanish, thus

Hence, the error is proportional to

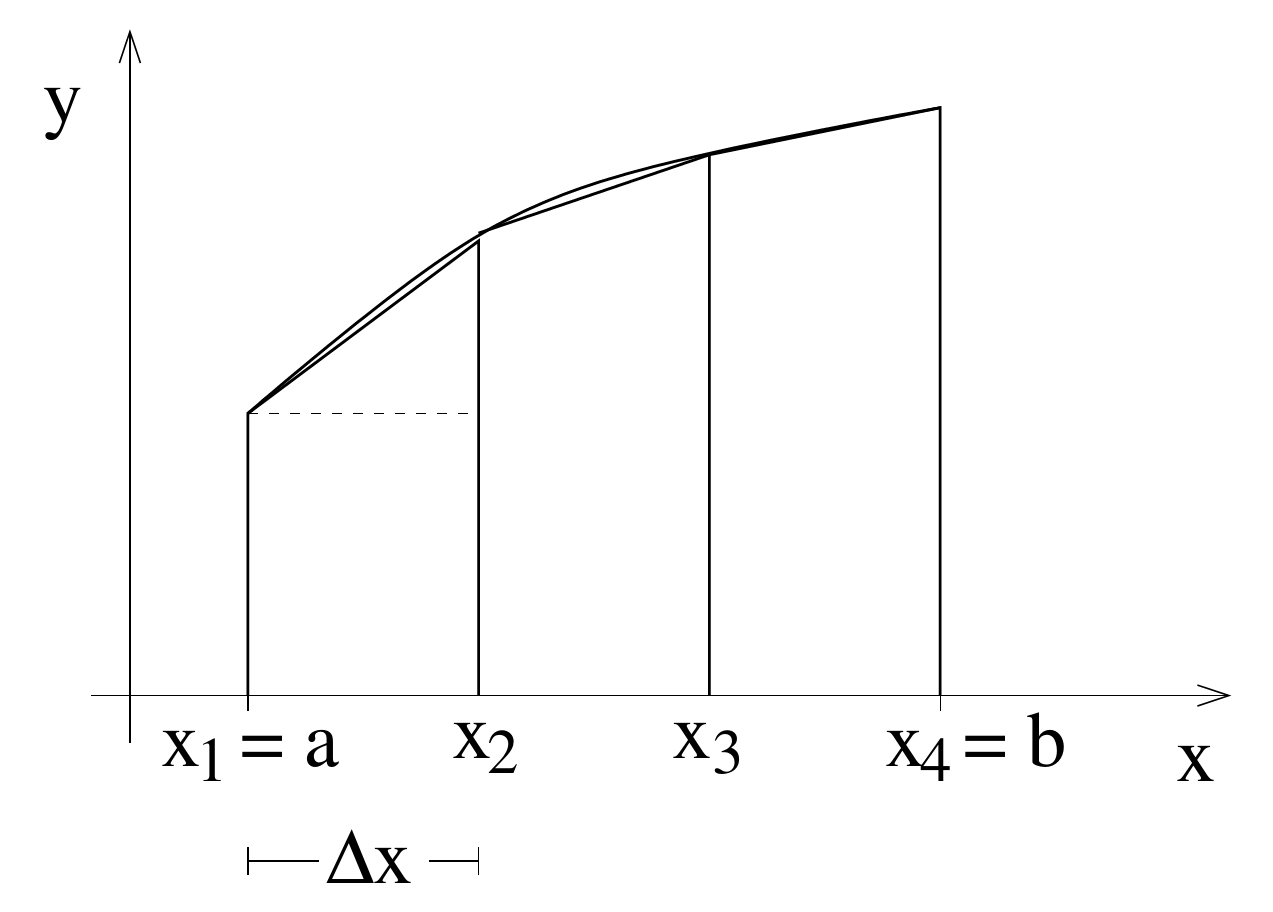

Trapezoidal Rule

Instead of approximating the area by little rectangles, here, trapezoids are used (see fig.7.3).

Figure 7.3.: Scheme of the trapezoid rule. The dashed line illustrates the division of a trapezoid into a triangle and a rectangle.

The width of the sub-intervals is

In the part integral

It follows the ‘compound’ trapezoid rule:

Note that the trapezoid rule contains the boundaries

The error approximation gives, in analogy to the procedure used for the midpoint rule,

Thus, the trapezoid rule is less accurate by a factor of two than the midpoint rule. This is a surprising result, considering the two figures 7.2 and 7.3 which suggest the opposite. The seeming contradiction is resolved when realizing that the procedure of the midpoint rule partly compensates positive and negative deviations from the exact solution.

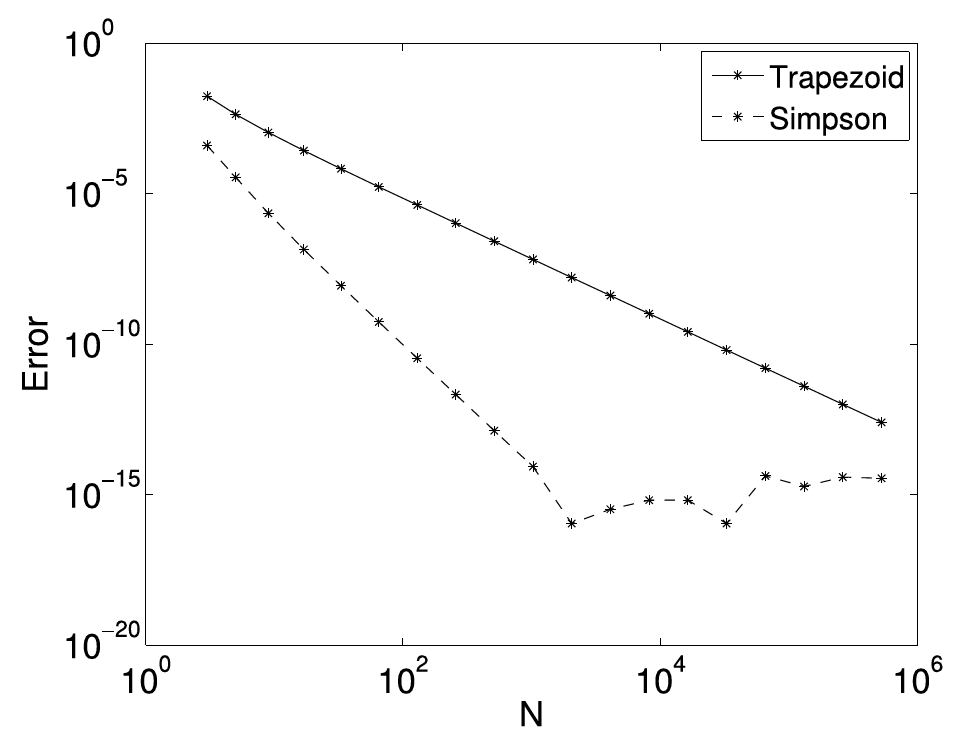

Simpson’s Rule

Simpson’s rule is often used since it is more accurate than the midpoint or the trapezoid rule. The idea behind Simpson’s rule is to approximate the integrand function as a parabola between three supporting points. For this parabola, the integral can be found analytically.

As in the trapezoid rule, the supporting points

The error shall be given without strict derivation:

The error term is of higher order as compared to the trapezoid rule. Thus we expect the error to be much smaller with the Simpson’s rule. Figure.7.4 confirms this expectation. With the same number of supporting points, Simpson’s rule gives far more accurate values for the integral than the trapezoid rule.

Figure 7.4.: Comparison of the absolute errors between the trapezoid rule and Simpson’s rule when evaluating the integral

Integration with Python

Python provides several routines for integration. The most important one for one-dimensional integrals is

scipy.integrate.quad(func, a, b, args=(), full_output=0, epsabs=1.49e-08, epsrel=1.49e-08, limit=50, points=None, weight=None, wvar=None, wopts=None, maxp1=50, limlst=50, complex_func=False)[source]

Compute a definite integral.

Integrate func from a to b (possibly infinite interval) using a technique from the Fortran library QUADPACK.

Here,

import scipy

import numpy as np

# Define the function to integrate

def function(x):

return np.exp(x)

# Perform the integration from 0 to 1

y, _ = scipy.integrate.quad(func=function, a=0, b=1)

print(y) # -> 1.7182818284590453

or

import scipy

import numpy as np

# Define the function to integrate

def function(x):

return 1.0 / (1.0 + x**2)

# Perform the integration from 0 to 1

y, _ = scipy.integrate.quad(func=function, a=0, b=1)

print(y) # -> 0.7853981633974484

Miscellaneous

Further interesting methods and aspects of numerical integration are

- Non-uniformly distributed supporting points:

- adaptive quadrature methods

- Gaussian quadrature

- Improper integrals, i.e. the function or the interval of integration is boundless

- Multidimensional integrals, e.g.

Numerical Differentiation

Many applications demand the approximate differentiation of functions via a numerical method. Finding the analytical differentiation is for example impossible if the function to be differentiated is by itself only given numerically.

Right-sided Differentiation

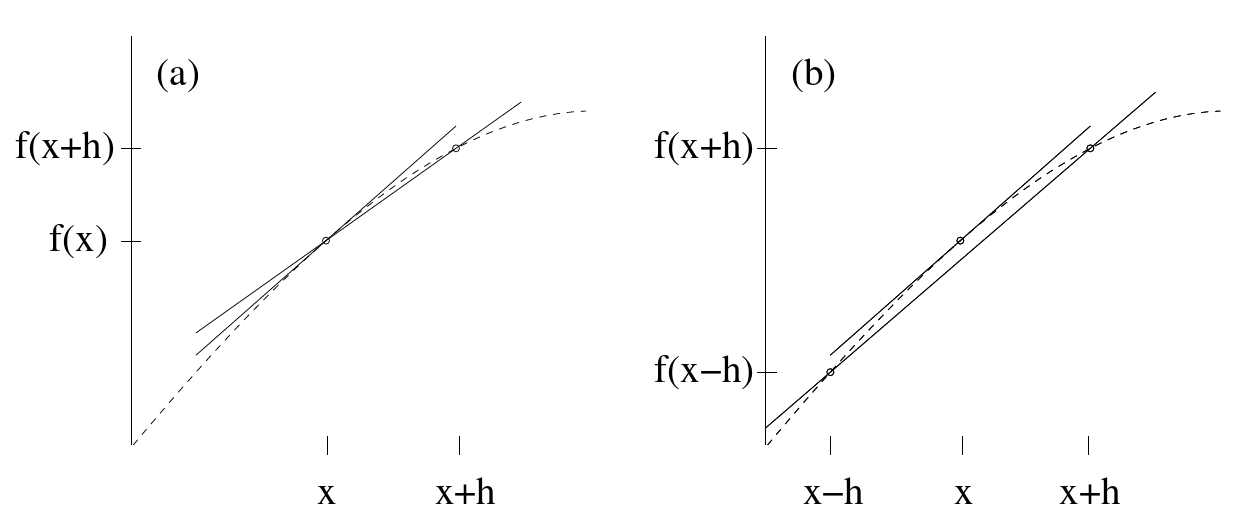

The differentiation of a function

Since

Figure 7.5.: Approximation of the slope of the tangent on the curve

To estimate the resulting approximation error, we consider the Taylor series up to the second order

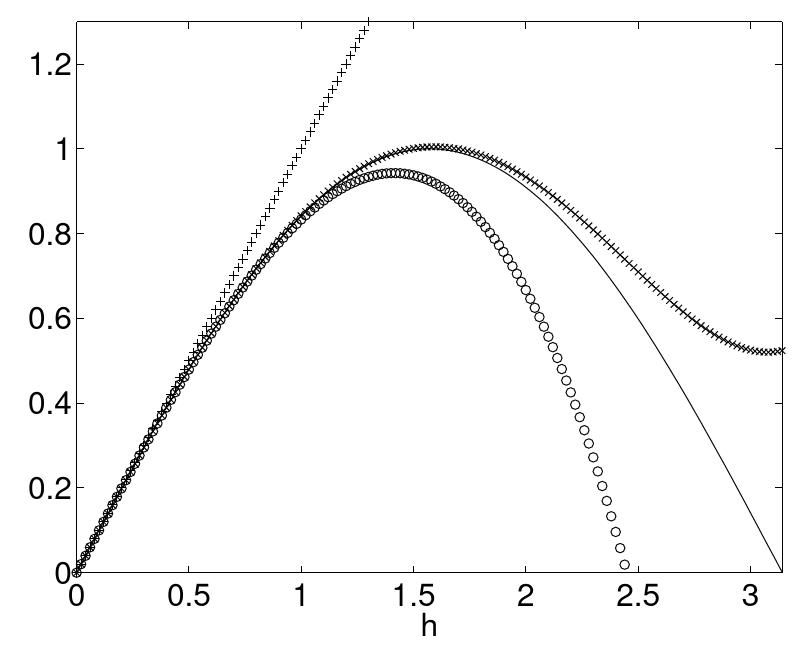

Figure 7.6 illustrates the Taylor series of the sine function at

Figure 7.6.: Sine function (continuous line) and the corresponding Taylor series including the 1. order(+), 3. order (o) and 5. order (x).

Rewriting the above Taylor series results in the relation

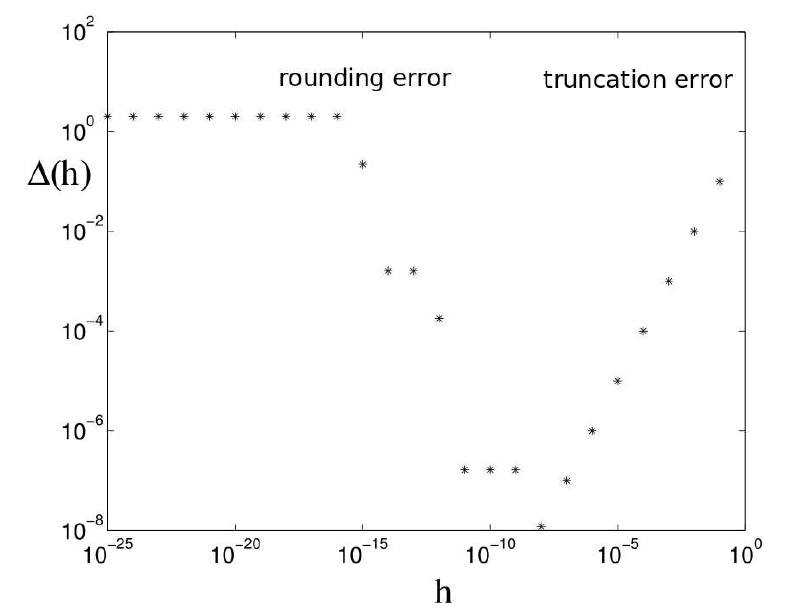

The left hand side is the exact derivative. The right hand side is the right-handed derivative and the approximation error, also called truncation error or discretization error, which is linear in

| $\Delta(h) = \left | f’(x)-\frac{f(x+h)-f(x)}{h}\right | \ ,$ |

as a function of

Figure 7.7.: Total error of the right-sided derivation of

Centered Differentiation

Consider the following Taylor series

Subtraction of both equation gives

The right hand side is the centered first derivation approximation. Note that the truncation error now decreases quadratically with the decrease of the step width

If we add the Taylor series for

The truncation error is also quadratic in

The source code is Open Source and can be found on GitHub.